
Tag Archives: Livongo

Peterson Center Kills the Diabetes Industry Dead

Last week the Peterson Health Technology Institute (PHTI, part of the Peterson Center on Healthcare) published the seminal report on the diabetes digital health industry, concluding that (with the clear exception of Virta, which we have also strongly endorsed) the minor health improvements claimed by Livongo, Omada and others nowhere near offset the substantial cost of these programs. To which we reply:

We, on the other hand, have known this since 2019. PHTI’s excuse, such as it is, is that it was formed in 2023. We’ll let it go this time…

The Likely Impact of the Findings

The report shows that digital health vendors (once again, with the exception of Virta, which emerged as the clear – and only – winner from this smackdown) are “not worth the cost.” We would strongly recommend reading it, or at least the summary in STATNews. It is quite comprehensive and the conclusion is well-supported by the evidence.

In the short run, the effect of this report should be Mercer renouncing its “strategic alliance” with Livongo (“revolutionizing the way we treat diabetes”) and returning the consulting fees it earned for recommending Livongo to its paying clients. (Haha, good one, Al.)

This was a rookie mistake by Mercer in the first place. Not forming the “alliance,” but rather announcing it. The whole point of benefits consultants making side deals with vendors is to do it on the QT so clients don’t notice. Hence, I’m not saying Mercer should actually renounce Livongo and harm its business model. Just that it should pretend to.

In the long run, this report should signal the end of the digital diabetes industry, meaning Livongo, Omada, Vida and a couple I’ve never even heard of. The bottom line: private-sector employers using digital solutions for diabetes may be violating ERISA’s requirement that health programs benefit employees by being “properly administered.”

The Empire Better Not Fight Back

Inevitably, the well-funded diabetes industry will fight back against PHTI’s report and “challenge the data.” They’d be right in one respect: the data does need to be “challenged.” However, it’s for the opposite reason: PHTI went far too easy on these perps.

Here is what I would have added to the report, had they retained my services. (And I’d be less than honest if I didn’t admit I had hinted they should, but I think by then their budget was fully committed.) These points will inevitably come to light in the event of a “challenge.”

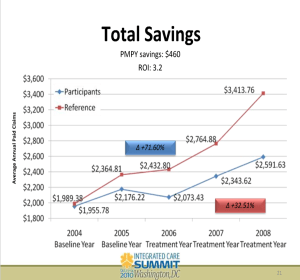

Second, matched controls are invalid because you can’t match state of mind. Ron Goetzel, the integrity-challenged leader of what Tom Emerick used to call the “Wellness Ignorati,” demonstrated that brilliantly, naturally by mistake. Take a looksee at what happens when you match would-be participants to non-participants – but without giving the former a program to participate in. The Incidental Economist piled on. And then the Wellness Ignorati tried to erase history, recognizing they had accidentally invalidated their entire business model. Diabetes is no different. It turns out there’s a reason the FDA doesn’t count studies that compare participants to non-participants. Yet PHTI accepted non-participants as a control group.

Third, along with sample bias there is investigator bias. Livongo’s main study was done by — get ready — Livongo. Along with some friends-and-relations from Eli Lilly and their consultants. PHTI assumed investigators were on the level. I’d direct them to Katherine Baicker’s two studies on the wellness industry. The first – whose “3.27-to-1 ROI” pretty much greenlit the wellness industry – was a meta-analysis of studies that were done – get ready – by the wellness industry. It has been cited 1545 times. The second, featuring Prof. Baicker’s own independently funded primary research, found exactly the opposite. It has been cited 16 times.

Don’t get us started on Livongo

Oops, make that a million and one.

Drawing undue attention to unwanted publicity has since been dubbed the Streisand Effect. Right now this PHTI report is mostly of interest to the cognoscenti. Most of its customers won’t notice.

Why? Because what we say about wellness is likely also true here: “There are two kinds of people in the world. People who think diabetes digital health works, and people who have a connection to the internet.”

Was Livongo’s “peer-reviewed journal article” really just an ad?

Here’s how an ad gets published. It’s a two-step process. I will lay it out so that even the dumbest member of the media who somehow missed this the first time when they swooned over Livongo’s outcomes can understand it now:

- Your employees and their colleagues write it.

- You pay to have it published.

Now, let’s look at what Livongo just published, touting their own outcomes, to see how, if at all, it differs from an ad.

- Their employees and colleagues wrote it. We covered this last time we wrote about Livongo. The article was written by Livongo employees, assisted by Eli Lilly employees. (Eli Lilly funded the study.)

- They paid to have it published. We missed this the first time around. Our excuse is, so did quite literally everyone else who covered the story. And “covering stories” isn’t our Day Job. We aren’t journalists. We don’t even play them on TV. We’ve never even watched journalist shows on TV, unless you include Superman reruns. Livongo seems to have a lot in common with that show, transparency being its kryptonite.

The journal is called the Journal of Medical Economics. Sounds really prestigious, so points for that. Yet virtually no other journal article cites articles in this journal, giving it an Impact Factor south of 2. (New England Journal of Medicine gets a 70.) Turns out there’s a reason no one cites it. Here’s how you get published in it. You pay them money.

They would say, yes, but we got it peer-reviewed. To which I say, apparently you didn’t in any meaningful sense. A real peer reviewer would have found and questioned all the fallacies in their article, rather than rubber-stamp some very sketchy “findings,” which for convenience’ sake are all catalogued in one place.

There is nothing wrong with advertising your outcomes, as long as your ad is labeled as an ad. You often see airline magazines with entire sections advertising various cities, using articles and pictures. But they are always labeled as ads. If you don’t do this, there is always the slight possibility, however remote, that someone doesn’t do the research to figure out that in fact this publication was pay-to-play. If that were to happen, you might see a headline like this:

Whereas a more accurate headline might read: “Livongo Pays for an Article to Claim Its Product Works.”

Update January 3: Someone contacted me to say that the correct term for paid, highly informational advertising is “sponsored content.” That term fits Livongo’s self-generated, self-published study perfectly. They should relabel it as such.

Are Livongo’s outcomes real?

Kudos to employers who have resisted the entreaties of their carriers to jump on the Livongo bandwagon. Resistance is not easy: carriers will pester employers endlessly because they get a nice commission every time an employer bites. So does Mercer, which has a “strategic alliance” with Livongo, but I’m sure Mercer discloses its financial arrangement to its employer clients…

Instead, I would simply recommend waiting until Livongo answers these seven questions that they apparently can’t answer. Or they can, but choose not to. Not sure which inspires less confidence.

The questions below refer to a “study.” By way of background, this study was conducted by Livongo’s employees, along with employees of its partnered diabetes supply company (Eli Lilly), which also funded the study. So there couldn’t possibly have been any conflict of interest, right? Right?

It was published in something called the Journal of Medical Economics (JME). And no, I hadn’t heard of this publication either. Turns out it’s an “open-access” journal offering “accelerated publication,” where you pay to publish. The Impact Factor is 1.9.

Not familiar with Impact Factors? They measure how often a journal’s recent articles get cited, a rough proxy for the journal’s influence. For instance, the New England Journal of Medicine tallies a 70.8. How hard is it to get only a 1.9? Even the American Journal of Health Promotion (AJHP), which recently proposed charging employees for insurance by the pound, scratches out a 2.6. Possibly that’s because AJHP does sprinkle more humor into its content than JME, like:

I guess that means 10 states both prohibit it and allow it.
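For readers who want the mechanics behind those numbers: the standard two-year Journal Impact Factor (the version Clarivate publishes in its Journal Citation Reports) for a given year Y is a simple ratio, roughly:

```latex
\mathrm{IF}_Y = \frac{\text{citations received in year } Y \text{ by items published in } Y-1 \text{ and } Y-2}
                     {\text{number of citable items published in } Y-1 \text{ and } Y-2}
```

So an Impact Factor of 1.9 means that, on average, an article from the journal’s previous two years was cited fewer than twice that year.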

Question #1 for Livongo

Even journals where companies pay to place their articles need to do a little peer review (which itself is increasingly considered to be a joke), and this peer review was pretty basic: the authors were asked to disclose that the study couldn’t draw any causal relationship between the Livongo intervention and the results. The title of the article itself says that reduced medical spending is “associated with” their product. Later the authors say the results “imply” the product works.

So if the study showed only a correlation and not causation, why does Livongo’s press release announce:

“The findings showed that by using its remote digital health platform, the Livongo for Diabetes program delivered an $88 per member monthly reduction” ?

Question #2 for Livongo

Since these alleged findings are the opposite of Livongo’s initial claim below, featured in a recent Valid Points posting about diabetes vendors snookering purchasers, the second question is, how did the original 59% reduction claim (which basically requires eliminating every hospitalization not connected with births, trauma or cancer) get replaced by the opposite claim that large reductions in physician visits generate all the savings, while admissions increased?

A while back, I actually looked into the likelihood that tighter glycemic control, which is itself rather controversial (“there is good evidence that intensive control of blood glucose increases patients’ relative risk of severe hypoglycemia by 30%”), could reduce hospitalizations. I used very optimistic assumptions (since I was consulting for a company making a glucometer with remote capabilities, not unlike the one Livongo pitches). Here’s what I came up with: savings of $27 per diabetic per year in inpatient admissions, as opposed to Livongo’s $88 per diabetic per month.
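A quick back-of-envelope check puts that gap in perspective. This sketch simply annualizes the claimed monthly figure and divides it by the optimistic annual estimate. It assumes, as the paragraph does, that both figures are per diabetic and can be compared directly, and it ignores that the $27 covers inpatient savings only.

```python
# Back-of-envelope comparison of the two savings figures quoted above.
# Assumption: both are per diabetic member, so they can be compared
# directly once the monthly claim is converted to an annual figure.

OPTIMISTIC_INPATIENT_SAVINGS_PER_YEAR = 27  # dollars per diabetic per year (author's model)
CLAIMED_SAVINGS_PER_MONTH = 88              # dollars per member per month (Livongo's claim)

# Annualize the monthly claim.
claimed_savings_per_year = CLAIMED_SAVINGS_PER_MONTH * 12

# How many times larger the claim is than the optimistic estimate.
ratio = claimed_savings_per_year / OPTIMISTIC_INPATIENT_SAVINGS_PER_YEAR

print(f"Claimed: ${claimed_savings_per_year:,} per year")
print(f"About {ratio:.0f}x the optimistic inpatient estimate")
```

Run as written, it reports a claim of $1,056 per year, roughly 39 times the optimistic estimate.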

In all fairness to Livongo, they don’t promote that inpatient admissions result anymore (I may have missed the apology for fudging that outcome in the first place), focusing instead on getting doctors paid less money.

Seems curious that physicians would be doing more work – more notifications from remote monitoring devices about blood sugar, more titrating dosages – and be perfectly fine making 26% less money. Plus, every other wellness vendor brags about how many more physician visits they generate, not how many fewer.

Question #3 for Livongo

If I’m seeking a vendor to control diabetes in my population (assuming that is even possible on a broad scale), I would look for weight loss (without which diabetes reversal is basically impossible, but then again sustained weight loss itself is pretty close to impossible) as the leading indicator.

As an intermediate indicator, I would measure units of insulin use across the entire population. That should decline if people are eating much better and exercising much more and losing weight.

The end-point indicator would be a decrease in admissions for diabetes. Of course, since diabetes admissions generally rise after retirement age, it isn’t exactly easy for an employer to save money by reducing them among its employees, for the simple reason that there hardly are any.

Specifically, for the last year in which a full set of data is available (2014), 158 million commercially insured people under 65 generated 126,710 admissions for diabetes. In other words, the commercial admission rate is so low that a 10% reduction in admissions (which has never been achieved in any population health program) would let an employer with 10,000 employees avoid (get ready) about 1 admission.
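Here is that arithmetic spelled out, using only the 2014 figures quoted above. As in the paragraph, the sketch treats the employer’s 10,000 employees as its covered population; the helper names are illustrative, not from any real tool.

```python
# Reproduces the admission-rate arithmetic from the paragraph above.
# Inputs are the 2014 national figures quoted in the post. Assumption:
# the employer's covered population is its 10,000 employees.

COMMERCIAL_LIVES_UNDER_65 = 158_000_000
DIABETES_ADMISSIONS = 126_710


def expected_admissions(population: int) -> float:
    """Expected diabetes admissions per year at the national commercial rate."""
    rate = DIABETES_ADMISSIONS / COMMERCIAL_LIVES_UNDER_65  # about 0.0008 per person
    return population * rate


def admissions_avoided(population: int, reduction: float) -> float:
    """Admissions avoided if a program cut the baseline by `reduction` (0 to 1)."""
    return expected_admissions(population) * reduction


baseline = expected_admissions(10_000)      # roughly 8 admissions per year
avoided = admissions_avoided(10_000, 0.10)  # roughly 0.8, i.e. about 1

print(f"Baseline: {baseline:.1f} admissions/year")
print(f"Avoided at a 10% reduction: {avoided:.1f}")
```

The baseline comes out to about 8 diabetes admissions a year, so even an unprecedented 10% reduction avoids fewer than one.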

We suspect Livongo knows this because they listed the diabetes diagnosis codes in their appendix, and it takes about 5 minutes to tally the US admission rate for those codes using the federal database designed for that purpose. And if they don’t know it, they should. Any health services researcher should be aware of this database.

So why didn’t Livongo measure any of those three outcomes? Or did they measure them and decide not to report them?

I’m not sure which answer is “right”: While I would be very concerned if they were suppressing data, I think I would be even more concerned if they didn’t know enough about diabetes to measure the key outcomes.

Question #4 for Livongo

Why did Livongo measure participants against non-participants, when that study design is known to be completely invalid? A participant-vs-non-participant (“par-vs-non-par”) result has been benchmarked five times, including three times by wellness promoters hoping to prove the design valid. The conclusion in each case: 100% of the difference in outcomes between the two groups is attributable to the study design, and 0% to the intervention. The design is 100% invalid.

There was actually a case in which the two groups were separated and “match-controlled” in 2004, but the program didn’t start until 2006. During the 2-year period following separation but before the program became available to participate in, the would-be participants nonetheless dramatically outperformed the non-participants…despite not having a program to participate in. (This result caused quite a ruckus in the wellness industry once they realized what they had done.)

Further, more than 15% of the initial Livongo participants dropped out. Assuming dropouts largely failed, aren’t the authors of the journal article overstating the outcomes for the participant group as a whole by not counting dropouts? Isn’t that like on-time performance statistics not accounting for planes that crashed?
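The size of that overstatement follows from a simple weighted average. In this sketch, d is the share of enrollees who dropped out (more than 0.15, per the paragraph above), and the average savings among completers and among dropouts are written as s̄_c and s̄_d. Taking the question’s assumption literally, that dropouts got no benefit, gives:

```latex
\bar{s}_{\text{all}} = (1-d)\,\bar{s}_c + d\,\bar{s}_d
\quad\xrightarrow{\;\bar{s}_d = 0\;}\quad
\bar{s}_{\text{all}} = (1-d)\,\bar{s}_c,
\qquad
\frac{\bar{s}_c}{\bar{s}_{\text{all}}} = \frac{1}{1-d} > \frac{1}{0.85} \approx 1.18
```

Under that assumption, reporting completers alone inflates the per-enrollee result by more than about 18%, and by more still if dropouts actually did worse than before they enrolled.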

Question #5 for Livongo (really multiple questions)

Livongo offers “free unlimited glucose test strips” to try to reach a “target level of glucose control of Hb A1c <6.5%.”

It is not quite clear that either unlimited free strips or a 6.5% Hb A1c target is good for diabetics. Why, one might ask, does the American College of Physicians propose 7% to 8% instead of 6.5%? While it is true that the American Diabetes Association is sticking with its much lower blood sugar goal, isn’t it also the case that the ADA is largely funded by companies that make products to help lower blood sugar?

And what is the rationale for encouraging more use of glucose strips while Choosing Wisely recommends less testing for many Type 2 diabetics (in JAMA Internal Medicine, impact factor 20)? Exact words:

This recommendation is based on robust evidence, including a Cochrane review of 12 randomized clinical trials with more than 3000 patients, showing no statistical difference between patients who do not self-monitor their blood glucose multiple times per day and those who do self-monitor their blood glucose multiple times per day in glycemic control, nor evidence of effects on health-related quality of life, patient satisfaction, or decreased number of hypoglycemic episodes.

Question #6 for Livongo

Can you explain this passage?

The results also indicate significant reductions in hypercholesterolemia-related spending (2.8%), hypertension-related spending (5.3%), outpatient hospital spending (3.7%), and utilization in outpatient hospitals (1.3%) and office visits (5.6%).

Specifically, is there any literature whatsoever that says doing more blood sugar checks reduces spending on cholesterol and blood pressure drugs? Were you just “shopping” for retrospective correlations?

Why would “outpatient hospitals” decline? What do ambulatory surgery centers have to do with checking your blood sugar?

Question #7 for Livongo

Why are your Amazon and BBB reviews so bad?

One of the reviewers claims that a Livongo “tip” is: “Did you know that not getting enough sleep can make you cranky and out of sorts?” Is this true?

So it could be that this is just me, since their revenues are doubling every year. Maybe, like in the rest of wellness, answering questions about efficacy is beside the point. Maybe the point in HR is just to do something, regardless of whether it works, in case someone in the C-Suite asks. Or in the immortal words of the great philosopher Yogi Berra: “We don’t know where we’re going, but we’re making good time.”

Questions to ask Livongo before signing with them

In 2014, the Validation Institute, then a joint property of Intel and GE, tasked me, based on Why Nobody Believes the Numbers, with developing the most sophisticated outcomes measurement course ever devised. That was not a heavy lift, because at the time no other such course existed. They named it Advanced Critical Outcomes Report Analysis, or “Advanced CORA” for short.

Five years later, the course, now called CORA Pro, remains the Gold Standard in outcomes measurement expertise. CORA Pros — and there are only 8 of us — have developed a talent not just for sniffing out questionable claims (anyone can “challenge the data”), but more importantly for identifying contradictory, implausible and even impossible claims that somehow hadn’t been noticed before. Even a simple press release can become a case study in impossibility.

CORA certification itself is very useful, and takes you halfway there. But there’s something special about CORA Pro. Once you are certified in CORA Pro, you never look at a vendor outcomes report the same way again. For instance, consider Livongo’s recent glowing press release about its outcomes. Non-CORA Pros, like whoever these people are, basically just parrot a vendor’s results uncritically with a glowing headline. They sound like Flounder.

By contrast, a CORA Pro would review Livongo’s claims thoughtfully, and ask probing questions, first about the outcomes:

- If your goal is to reduce admissions for diabetes and reduce insulin usage across the population, why didn’t you measure admissions for diabetes, or insulin usage?

- Why does this report show the opposite of your report from 2018? This report shows huge reductions in outpatient spending and no significant change in inpatient, whereas the 2018 report showed huge reductions in inpatient with no significant change in outpatient.

Then a CORA Pro would ask whether Livongo may be harming employees:

- Why is your Hb A1c goal of 6.5% in conflict with the American College of Physicians and other expert bodies not funded by the diabetes industry, which advocate 7.0% to 8.0%? Perhaps they mean reaching 6.5% with diet and exercise, but if insulin use is going up (likely, since they didn’t claim it was declining), clearly some employees aren’t getting the subtle nuance that they shouldn’t aim for 6.5% with medication.

- Why does Livongo push employees to check glucose multiple times a day when Choosing Wisely says most should do the opposite, to prevent harm?

Just askin’… Because that’s what CORA Pros do.

The full list of questions can be found here.

To learn about CORA certification, click here.