
Yearly Archives: 2024

GLP-1 Coverage: Dissuade the Curious and Educate the Serious

We are going to take time out from our usual policing of the perps in this field (and if you missed the last one, here it is) to show you something positive:

- you can cover weight loss drugs without breaking the bank

- while at the same time segmenting your population into those likely to fail and those likely to succeed on them

Yep, Quizzify’s Weight Loss Drug Companion Curriculum “dissuades the GLP-curious and educates the GLP-serious.” That is exactly what you want to do. After taking our 27-question Curb Their Enthusiasm introductory quiz covering the downsides of GLP-1s, only those who are very serious about improving their health will continue on to the drugs.

Here is a 5-minute read on how we do this, including our two favorite questions among those 27. If you took this quiz, would you want the drugs? I didn’t think so…

If you prefer the 3-minute version in video, try this https://www.youtube.com/watch?v=FeNQZGRpeNQ

Please put comments on LinkedIn, as I now consolidate them all in one place. https://www.linkedin.com/posts/al-lewis-%F0%9F%87%BA%F0%9F%87%A6-57963_every-other-entity-involved-in-weight-loss-activity-7242511398851813376-1xOM?utm_source=share&utm_medium=member_desktop

Aon channels Britney Spears in Lyra report

An open (and also sent, received, read and unobjected to) letter to Aon’s chief actuary, Ron Ozminkowski.

Dear Mr. Ozminkowski,

It seems that there are always some rookie mistakes in your analyses. Either that, or you are simply “showing savings” because your clients are oxymoronically paying you as “independent actuaries” specifically to show savings. I will assume that your mistakes are just rookie mistakes, rather than deliberate misstatements. Yet as I recall, you never fixed your Accolade analysis after it was pointed out that your own assumptions, when correctly analyzed by someone whose IQ possesses that critical third digit, inexorably led to the opposite conclusion: Accolade loses money.

Perhaps that bug is a feature for your clients, and indeed your job description is to “show savings.” Mine is the opposite: to demonstrate integrity.

If I am wrong and you are genuinely trying to do the right thing, I would be happy to fly out there and teach you people how to do arithmetic, because, in the immortal words of the great philosopher Britney Spears, oops, you did it again. This time for Lyra. With all the money they paid you, it seems like they should be able to expect correct analysis. They might be very disappointed in you.

On the off-chance that you’d like to see what a real study design looks like in mental health, Acacia Clinics would be a good one to review. Here is the Validation Institute report and here is the science underpinning it.

If you are quite certain your arithmetic is correct despite all indications to the contrary, I would invite you to bet. I say that Acacia Clinics’ study design and analysis are correct and your study design and analysis are wrong. You say the opposite. Here are the rules for the bet. If you won’t bet, you are of course conceding that Acacia’s analysis is correct and your analysis, to use a technical biostatistical term, sucks.

I am already finding five rookie mistakes, and I’ve only read the first five pages.

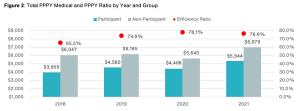

First take a looksee at this screenshot below. I was having a lot of trouble figuring out how the red dots showing something you’ve dubbed the “efficiency ratio” (a term which apparently has no meaning in health services research, as far as Google is concerned, while ChatGPT thinks it means something else altogether, but what do they know?) were related to the differences in the size of the bars. Then I realized you accidentally started the y-axis at $4000 instead of $0. A rookie mistake, which inadvertently makes the alleged savings look about 3 times higher than they are.

Meaning your so-called “efficiency ratio” is the value in the blue bar as a percentage of the gray bar, not the height of the blue bar as a percentage of the gray bar. Call me a traditionalist, but in my humble opinion those two ratios should be the same. (Note: apologies for the blurry screenshot. That’s how it’s reproducing.)

I did notice that later on, pretty much the same data in Figure 1 was reproduced as Figure 2, but this time you started the y-axis at $1000. So you’re definitely getting warmer!
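For anyone who wants to check the y-axis arithmetic themselves, here is a minimal sketch. The two bar values are hypothetical (the report's actual figures are not reproduced here); they are chosen only so that a $4000 axis start yields roughly the threefold exaggeration described above. The function names are illustrative too.

```python
def drawn_gap(small: float, large: float, axis_start: float) -> float:
    """Gap between two bars as a fraction of the taller bar's drawn height."""
    if not axis_start < small <= large:
        raise ValueError("expected axis_start < small <= large")
    return (large - small) / (large - axis_start)


def exaggeration(large: float, axis_start: float) -> float:
    """How many times larger the drawn gap looks than the true percentage gap."""
    if not 0 <= axis_start < large:
        raise ValueError("expected 0 <= axis_start < large")
    # drawn gap / true gap simplifies to large / (large - axis_start)
    return large / (large - axis_start)


# Hypothetical bar values, NOT taken from the Aon report.
gray_bar, blue_bar = 6000.0, 5400.0

for start in (0.0, 1000.0, 4000.0):
    gap = drawn_gap(blue_bar, gray_bar, start)
    factor = exaggeration(gray_bar, start)
    print(f"axis starts at ${start:,.0f}: gap looks like {gap:.0%} ({factor:.1f}x the true gap)")
```

Note that the exaggeration depends only on the taller bar and where the axis starts, which is why moving the start from $4000 to $1000 shrinks the distortion without removing it.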

Happy New Years!

Second, you may want to check your calendar, because it is now 2024. Your analysis ends at 2021. You’ve had almost two years and five full months plus a Leap Day to update it and yet, you cut it off in 2021. A cynic might conclude that you picked that end date because the alleged benefit you are claiming regresses further to the mean in 2022 and 2023.

Looks like you threw up in front of Dean Wormer

Third, speaking of regressing to the mean, the reason a cynic could infer that conclusion is that your so-called “efficiency ratio” already was regressing to the mean. Let’s assume, for now, the unassumable: that your “matched controls” are a legitimate study design. (If it were, the FDA would allow it.) Between 2018 and 2021, according to your own numbers on that chart, participant costs rose 31% while non-participant costs rose 22%. And yet somehow that statistic appears nowhere in your report, once again a rookie mistake.
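The fifth-grade arithmetic behind that point can be sketched as follows. The 31% and 22% figures come from the chart as quoted above. Treating the “efficiency ratio” as participant cost divided by non-participant cost is an assumption, as is the 0.80 starting ratio, which is purely illustrative.

```python
def relative_drift(participant_growth: float, non_participant_growth: float) -> float:
    """Change in (participant cost / non-participant cost) over the period."""
    return (1 + participant_growth) / (1 + non_participant_growth) - 1


# Growth rates quoted in the text for 2018 to 2021.
drift = relative_drift(0.31, 0.22)
print(f"participant costs rose {drift:.1%} relative to non-participant costs")

# Hypothetical 2018 ratio, NOT from the report, to show the direction of travel.
ratio_2018 = 0.80
ratio_2021 = ratio_2018 * (1 + drift)
print(f"a ratio of {ratio_2018:.2f} in 2018 would drift to {ratio_2021:.2f} by 2021")
```

Whatever the starting ratio, it moves about 7% closer to parity over the period, which is the direction you would expect if the initial gap was selection rather than savings.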

Are you having connectivity issues?

Fourth, there are two types of outcomes researchers in our industry. Those who think “matched controls” are a valid study design for this kind of analysis, and those who have a connection to the internet. If you can’t afford my seminal book, try this article on the Validation Institute website which proves – using fifth-grade arithmetic – why that methodology doesn’t work. Period.

Perhaps the giveaway why “matched controls” don’t work in this case might be that the savings started on the first day of the baseline year. An employee has one phone call (yes, that was the cutoff point to get into the study group, though some people had many more) with one of Lyra’s “220,000 high-quality providers” and their medical spending drops precipitously. I’d also love to know what Lyra’s Secret Sauce is, that lets them retain 220,000 providers, all of whom are “high-quality.”

The following things change immediately as a result of that call, even though they are not part of the conversation and require a real doctor or in the case of ER visits, a great deal of luck:

- Non-mental health emergency visits plunge by 30%

- Generic drug scripts plunge by 30%

- By 2021, even expensive specialty meds fall by more than 20%

You might want to retain a smart person to explain the difference between correlation and causation. Alternatively, perhaps you are concerned that this meteor almost hit the visitors center?

A mystery wrapped inside a riddle wrapped inside a seven-figure consulting fee

Fifth, consider that Aon has data for:

- medical claims

- diagnoses

- professional mental health spending

- inpatient mental health spending

- outpatient mental health spending

- spending on non-mental health

- ED and inpatient visits

And consider that:

- They did this study for Lyra

- The study is called “Lyra Cost Efficiency [sic*] Results”

- The “Workforce Mental Health Program information was provided by Lyra Health”

Yet somehow – despite having the aforementioned two years, five months and a leap day to prepare this study – they claim to have absolutely no idea how much Lyra’s services cost:

We suspect it is a lot, perhaps enough that mental health professional fees with Lyra for participants exceed mental health spending by non-participants. Because in addition to having to pay their “evidence-based therapists” (Lyra’s term), sales, marketing, overhead and profit, Lyra needs to pay off the benefits consultants too, to “partner with” them:

Finally, where’s the guarantee of credibility? Does Aon not stand behind its work? I guess that’s a wise move on your part, because if you did, I’d be rich. By contrast, Acacia Clinics was validated by the Validation Institute (VI). They do stand behind their work, so the VI’s findings on Acacia Clinics’ outcomes are backed by a $100,000 Credibility Guarantee. That, of course, is in addition to my own guarantee.

Did Mr. Ozminkowski just damage Lyra’s reputation…and Aon’s own?

The irony here is that Lyra is considered (or was considered, until this report) a perfectly legit vendor that is providing a valuable service of connecting employees to mental health professionals that match their needs. That is especially useful these days, when mental health benefits are very skinny and mental health providers are hard to come by. The “ROI” is employee appreciation, and possibly higher productivity. Not magical reductions in medical spending completely unrelated to the issues they are calling about.

Paying off consultants (who coincidentally also send them business) to pretend otherwise could damage that reputation. A rookie mistake on their part.

Further, there are some really smart, really honest consultants at Aon. But just like one dirty McDonald’s would sully all of them, the organization as a whole suffers when one consultant goes rogue.

*It’s either “efficiency,” meaning the cost vs. the benefit, or “cost-effectiveness.” “Cost efficiency” is redundant. They really shouldn’t need me to tell them that – or, for that matter, anything else in their report.

A lesson in valid measurement: Vida vs. Virta

The Peterson Health Technology Institute (PHTI) recently published an exhaustive study demonstrating what we’ve been saying (and guaranteeing) all along: Virta is unique among diabetes vendors in that it actually has an impact, while digital diabetes vendors don’t. (Diathrive, a diabetes supply company, also saves money but supplies are a different diabetes category.)

PHTI did a great job as far as the report went. And Livongo and Omada have accepted their conclusions. By “accepting their conclusions,” I mean declining my bet. Sure, they did some perfunctory whining about not looking at the data the right way yada yada yada in one of those “he said-she said” articles, but at this point everyone (at least everyone with a connection to the internet) knows they lose money.

That brings us to Vida. Until now Vida has flown under the radar, but the sound-alike name gives them the opportunity to be confused with Virta. That was coupled with a brilliant two-part strategy regarding the PHTI report:

- Don’t submit any studies to PHTI

- Complain that PHTI didn’t look at their studies.

To the first point, PHTI lists cooperating vendors who submitted articles. (Virta submitted 12 to Vida’s 0.) To the second point, that aforementioned “he said-she said” article notes:

Vida says its diabetes programs are clinically proven to [reduce Hb A1c], as reflected in its body of peer-reviewed publications and the satisfaction of the “tens of thousands of members” who have found success with its diabetes program.

Not to be too semantic here on the latter point, but of course the people “who have found success” with your program will be satisfied. The question is what percentage have “found success.” In their case, according to this article, the percentage who dropped out or were lost to follow-up or didn’t complete their HbA1c tests was…hmmm…I can’t seem to find it. Am I missing something here?

Results: Participants with HbA1c ≥ 8.0% at baseline (n=1023) demonstrated a decrease in HbA1c of -1.37 points between baseline (mean: 9.84, SD: 1.64) and follow-up (mean: 8.47, SD: 1.77, p<0.001). Additionally, we observed a decrease in HbA1c of -1.94 points between baseline (mean: 10.77, SD: 1.48) and follow-up (mean: 8.83, SD: 1.94, p<0.001) among participants with HbA1c ≥ 9.0% (n=618).

Bookmark this paragraph because we will be coming back to it. Turns out one could teach an entire class based on this paragraph alone, comparing Vida to Virta. And Part 2 will do exactly that.

Peer Review

Here is the Validation Institute’s summary of so-called “peer-reviewed publications,” in Part 5 of their 9-part series on validity:

Vendors have realized that prospects consider the phrase “peer-reviewed” to settle all debates about legitimacy. Part Five will take you inside the thriving peer-reviewed journal industry to show you how peer reviews are bought and sold.

Often, vendors will brag about being peer-reviewed. Most prospects of vendors will then assume that the data was carefully vetted and reviewed by independent highly qualified third parties before seeing the light of publication because, after all, no journal would ever publish an article that was obviously flawed, right?

Well, certainly not for free.

Indeed, probably 95% of journals have turned article submission into a profit center. The euphemism for this business model is “open access.” Open access means that instead of the subscriber paying to read these journals, the author pays to publish in them. In other words, vendors are placing ads. Livongo at least had the good sense to pretend their journal was real, by buying some space in something called the Journal of Medical Economics, which sounds pretty legit, right? Not open-access, right? Um…

Well, Vida didn’t even bother to pretend it wasn’t open-access when they placed an ad (technically called “sponsored content”) in JMIR Formative Research. It actually includes the word “open” in the logo…

…and lest there be any doubt about where their vig comes from, JMIR even publishes their price list, which they call an “article processing fee.”

Also, have you ever heard the phrase “investigator bias“? Well, here is the list of authors and funders. Notice a pattern?

G. Zimmermann: Employee; Vida Health. A. Venkatesan: Employee; Vida Health. K. Rawlings: Employee; Vida Health. R. S. Frank: Employee; Vida Health. C. Edwards: Employee; Vida Health.

Vida Health

Like a real journalist, I reached out to Vida to ask for comments. Here’s what they wrote back:

- We aren’t submitting any comments

- But then we will complain that you didn’t look at our comments

Haha, good one, Al. Actually they didn’t say the second but wouldn’t it be funny if they do?

Official Rules for Diathrive $100,000 Challenge

Congratulations to Diathrive Health for achieving validation from the Validation Institute (VI). That itself comes with a $50,000 Credibility Guarantee.

In rare cases of VI-validated organizations – such as Virta Health or Sera Prognostics or Acacia Clinics – I add my own “Best in Show” guarantee and offer a $100,000 reward for a successful challenge.

Any diabetes vendor mentioned in the Peterson Health Technology Institute report (other than Virta, which “won” in that report for its diet/coaching offering, which is not at all competitive with Diathrive) can challenge my statement that Diathrive has better and more validly measured savings than they do on the cost of supplies from a vendor that also includes coaching support.

Rosencare, based on the industry-leading results at Rosen Hotels, has achieved excellent results overall with Diathrive, but the specific cash-on-cash savings that are guaranteed would be actual diabetic supply prices offered by a company that also provides coaching.

Here are the terms to earn the $100,000 reward.

Terms and Conditions of Challenge

Selection of Judges

There will be five judges, selected as follows:

- Each side gets to appoint one, drawn from members as of 4/17/24 of The Healthcare Hackers listserve with 1300 people on it, from all walks of healthcare.

- Two others are appointed objectively. That will be whichever health services researchers/health economists are the most influential at the time the reward is claimed. “Most influential” will be measured by a formula: the highest ratio of Twitter followers/Twitter following, with a minimum of 15,000 followers.

- Those four judges will agree on the fifth.
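The “most influential” formula in the second bullet can be sketched as below. The candidate names and follower counts are made up for illustration. The rules do not say how to treat someone who follows nobody, so ranking that case at the top is an assumption, as are the class and function names.

```python
from dataclasses import dataclass

MIN_FOLLOWERS = 15_000  # minimum follower count stated in the rules


@dataclass(frozen=True)
class Candidate:
    name: str
    followers: int
    following: int


def influence_ratio(c: Candidate) -> float:
    """Twitter followers divided by Twitter following."""
    # Assumption: following zero accounts ranks highest (not covered by the rules).
    return c.followers / c.following if c.following else float("inf")


def pick_objective_judges(candidates: list[Candidate], count: int = 2) -> list[Candidate]:
    """Return the `count` eligible candidates with the highest ratio."""
    eligible = [c for c in candidates if c.followers >= MIN_FOLLOWERS]
    return sorted(eligible, key=influence_ratio, reverse=True)[:count]


# Hypothetical candidates, NOT real people or real follower counts.
pool = [
    Candidate("Economist A", followers=40_000, following=400),    # ratio 100
    Candidate("Researcher B", followers=20_000, following=100),   # ratio 200
    Candidate("Economist C", followers=14_000, following=10),     # below the minimum
    Candidate("Researcher D", followers=90_000, following=3_000), # ratio 30
]

for judge in pick_objective_judges(pool):
    print(judge.name)
```

The follower minimum is applied before ranking, so a small account with a lopsided ratio (Economist C here) cannot win on the ratio alone.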

Using the criteria below, judging will be based on validity of the measurement. Measurements deemed invalid, such as those described on the Validation Institute site, are a disqualifying factor for the challenger.

Written submissions

Each side submits up to 1000 words and five graphs, supported by as many as 10 links; the material linked must pre-date this posting to discourage either side from creating linked material specifically for this contest.

Publicly available materials from the lay media or blogs or the Validation Institute may be used, as well as from any academic journal that is not open-access.

Each party may separately cite previous invalidating mistakes made by the other party that might speak to the credibility of the other party. (There is no limit on those.)

If a challenger is “validated” by a third party whose alleged outcomes have been invalidated on this site in the past, those other invalidations may be presented to the judges to impeach the credibility of this alleged validation.

Oral arguments

The judges may rule solely on the basis of the written submissions. If not, the parties will convene online for a 2-hour recorded virtual presentation featuring 10-minute opening statements, in which as many as 10 slides are allowed. Time limits are:

- 30-minute cross-examinations with follow-up questions and no limitations on subject matter;

- 50 minutes in which judges control the agenda and may ask questions of either party based on either the oral or the written submissions;

- Five-minute closing statements.

Entry process

The entry process is:

- Challenger and TheySaidWhat deposit into escrow the amount each is at risk for ($10k for the Challenger, and $100k for TheySaidWhat). Each party forwards $10,000 to the judges as well, as an estimate of their combined fees and/or contributions to their designated nonprofits.

- If the Challenger or Service Provider pulls out after publicly announcing an application, the fee is three times the amount deposited.

- The escrow is distributed to the winner and the judges’ fees paid by the winner are returned by the judges to the winner, while the judges keep the loser’s fees.
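Here is one reading of how the money nets out under these terms, as a minimal sketch. It assumes the winner receives both escrow deposits and that each side's $10,000 forwarded to the judges is separate from its escrow deposit. The withdrawal fee is left out because the terms do not spell out how it is calculated.

```python
ESCROW = {"Challenger": 10_000, "TheySaidWhat": 100_000}  # amounts at risk, per the terms
JUDGE_FEE = 10_000  # forwarded to the judges by each party


def net_outcomes(winner: str) -> dict[str, int]:
    """Net gain or loss for each party and the judges once a winner is declared."""
    if winner not in ESCROW:
        raise ValueError(f"unknown party: {winner}")
    loser = next(party for party in ESCROW if party != winner)
    pot = sum(ESCROW.values())
    return {
        # Winner takes the whole pot and gets its judges' fee back.
        winner: pot - ESCROW[winner],
        # Loser forfeits its deposit and its judges' fee.
        loser: -ESCROW[loser] - JUDGE_FEE,
        # Judges keep only the loser's fee.
        "judges": JUDGE_FEE,
    }


for party in ESCROW:
    print(party, "wins:", net_outcomes(party))
```

On this reading a successful challenger nets $100,000, an unsuccessful one is out $20,000, and the judges keep $10,000 either way.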

Peterson Center Kills the Diabetes Industry Dead

Last week the Peterson Health Technology Institute (PHTI, part of the Peterson Center on Healthcare) published the seminal report on the diabetes digital health industry, concluding that (with the clear exception of Virta, which we have also strongly endorsed) the minor health improvements claimed by Livongo, Omada and others nowhere near offset the substantial cost of these programs. To which we reply:

We, on the other hand, have known this since 2019. PHTI’s excuse, such as it is, is that it was formed in 2023. We’ll let it go this time…

The Likely Impact of the Findings

The report shows that digital health vendors (once again, with the exception of Virta, which emerged as the clear – and only – winner from this smackdown) are “not worth the cost.” We would strongly recommend reading it, or at least the summary in STATNews. It is quite comprehensive and the conclusion is well-supported by the evidence.

In the short run, the effect of this report should be Mercer renouncing its “strategic alliance” with Livongo (“revolutionizing the way we treat diabetes”) and returning the consulting fees it earned for recommending them to their paying clients. (Haha, good one, Al.)

This was a rookie mistake by Mercer in the first place. Not forming the “alliance,” but rather announcing it. The whole point of benefits consultants making side deals with vendors is to do it on the QT so clients don’t notice. Hence, I’m not saying Mercer should actually renounce Livongo and harm their business model. Just that they should pretend to.

In the long run, this report should signal the end of the digital diabetes industry, meaning Livongo, Omada, Vida and a couple I’ve never even heard of. The bottom line: private-sector employers using digital solutions for diabetes may be violating ERISA’s requirement that health programs benefit employees by being “properly administered.”

The Empire Better Not Fight Back

Inevitably, the well-funded diabetes industry will fight back against PHTI’s report and “challenge the data.” They’d be right in one respect: the data does need to be “challenged.” However, it’s for the opposite reason: PHTI went far too easy on these perps.

Here is what I would have added to the report, had they retained my services. (And I’d be less than honest if I didn’t admit I had hinted they should, but I think by then their budget was fully committed.) These points will inevitably come to light in the event of a “challenge.”

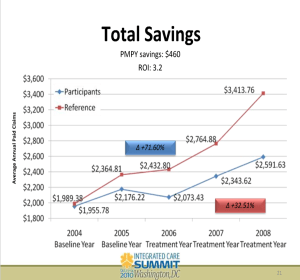

Second, matched controls are invalid because you can’t match state of mind. Ron Goetzel, the integrity-challenged leader of what Tom Emerick used to call the “Wellness Ignorati,” demonstrated that brilliantly, naturally by mistake. Take a looksee at what happens when you match would-be participants to non-participants – but without giving the former a program to participate in. The Incidental Economist piled on. And then the Wellness Ignorati tried to erase history, recognizing they had accidentally invalidated their entire business model. Diabetes is no different. There’s a reason the FDA doesn’t count studies that compare participants to non-participants, it turns out. PHTI accepted them as a control.

Third, along with sample bias there is investigator bias. Livongo’s main study was done by — get ready — Livongo. Along with some friends-and-relations from Eli Lilly and their consultants. PHTI assumed investigators were on the level. I’d direct them to Katherine Baicker’s two studies on the wellness industry. The first – whose “3.27-to-1 ROI” pretty much greenlit the wellness industry – was a meta-analysis of studies that were done – get ready – by the wellness industry. It has been cited 1545 times. The second, featuring Prof. Baicker’s own independently funded primary research, found exactly the opposite. It has been cited 16 times.

Don’t get us started on Livongo

Oops, make that a million and one.

Drawing undue attention to unwanted publicity has henceforth been termed The Streisand Effect. Right now this PHTI report is mostly of interest to the cognoscenti. Most of its customers won’t notice.

Why? Because what we say about wellness is likely also true here: “There are two kinds of people in the world. People who think diabetes digital health works, and people who have a connection to the internet.”

How Quizzify prevented another hospitalization – my own

For the second time in four years, Quizzify saved me a world of hurt. This time around was thanks to our Doctor Visit PrepKits, our companion app to Classic Quizzify, our healthcare trivia quizzes.

While the quizzes teach health literacy between clinical visits, the PrepKits teach health literacy specifically for clinical visits. You just enter a keyword, like a symptom, and the PrepKits tell you what you need to know, how to prepare for your visit, questions to ask the doctor and much more.

You might say “Well, our employees can just google on symptoms or ask their doctor.” Unfortunately, if I had just googled on “Swollen calf,” the first hit would be “no cause for concern.” (Try it.) And my doctor was quite convinced the PrepKits’ take on “swollen calf” was rather alarmist, but agreed to see me anyway.

Bottom line: Absent Quizzify2Go’s admittedly alarmist but nonetheless quite accurate advice that I should seek care urgently, I would have ended up in the hospital, with a procedure and a six-month recovery.

See how Quizzify once again saved the day…and turned a likely tale of woe into a minor inconvenience.

https://www.quizzify.com/post/how-quizzify-may-have-saved-my-life-again

And then imagine how many of your employees might have a similar experience. All you need is one, to pay for all of Quizzify.

PS Please put comments on LinkedIn – I’ve migrated all the comments there.

Those Nutrition Facts labels are, to use a technical term, crap.

There are precisely 3 pieces of useful information on these labels. Everything else ranges from useless to misleading.

And yet how often do you read those labels when selecting a product? And do you ever not believe the information on them? Well, it’s time to start reading these labels critically.

The food companies are “teaching to the test.” They are maximizing the perception that their products are healthy, instead of actually trying to create healthy products. Often the two goals are at odds with each other.

Please do not comment here. I’m taking all comments on LinkedIn. This link takes you there, and then if you are still interested, you can link to the full blog post.

Oprah’s on Ozempic! Here’s how much that will cost you.

For better or worse, Oprah has been America’s biggest weight loss influencer since the liquid diet fad in the late 1980s. [SPOILER ALERT: That diet didn’t work.]

And now she’s all in for Ozempic. Of course, her regimen also includes significant exercise and presumably enough means to afford a healthy diet, but those nuances will likely be lost on your employees jumping on the GLP-1 bandwagon in hopes of a magic bullet.

Magic bullet or not, never before in the history of healthcare has anything – any drug, procedure, test, anything – combined this much effectiveness, popularity…and cost. Indeed, this single class of drugs will likely add 10 basis points to the overall US inflation rate in 2024. (You heard it here first, folks.)

Assuming you cover these drugs for weight loss, how you manage them will have a significant impact on not just your drug costs, not just your healthcare costs, but actually your entire compensation costs. In turn, private sector companies could feel a margin squeeze of about 1%, as this link shows.

You might say: “Well, we covered them in 2023 and costs didn’t go up that much.” Perhaps, but that was when these drugs were in shortage.

And also pre-Oprah. Holding back the weight loss drug coverage tide just got that much harder.

SPOILER ALERT: This is the ideal use case for Quizzify.