The best outcomes evaluator in the wellness field is Dr. Iver Juster.*

*Among the subset of males not affiliated with They Said What.

Why Dr. Juster’s Case Study Is the Best Case Study Ever Done in This Field

Chapter 2 of the HERO Guide is a great study and deserves high praise. But before we get into the salient points that make this absolutely the best case study analysis ever done in this field, be aware that its provenance is no coincidence. Dr. Juster is very skilled at evaluation. Indeed, he was the first person to receive Critical Outcomes Report Analysis (CORA) certification from the Disease Management Purchasing Consortium. (Dr. Juster very graciously shares the credit, and as described in his comments below would like to be listed as "the organizer and visible author of a team effort.")

Note: the CORA course and certification are now licensed for use by the Validation Institute, which has conferred honorary lifetime certification on Iver gratis, to recognize his decades of contribution to this field. (Aside from the licensure, the Validation Institute is a completely independent organization from DMPC, from They Said What, and from me. It is owned by Care Innovations, a subsidiary of Intel. If you would like to take the CORA Certification course live, it is being offered next in Philadelphia on March 27. You can take it online as well.)

Early in the chapter, Iver lists and illustrates multiple ways to measure outcomes. He dutifully lists the drawbacks and benefits of each but, most importantly, notes that they all need to be plausibility-checked with an event-rate analysis, of which he provides a detailed example using data from his own work. In an event-rate analysis, wellness-sensitive medical events are tracked over the period in question.
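
A minimal sketch of the event-rate tally described above, assuming a simple layout of the data. Everything here is illustrative: the class and function names are hypothetical, the counts are invented, and rates are expressed per 1,000 member-years (12 member-months each), a common convention that may not match the exact one used in the HERO chapter. Only the categories passed in are counted, which mirrors the rule of tallying wellness-sensitive events and nothing else.

```python
from dataclasses import dataclass, field


@dataclass
class PeriodCounts:
    """Admissions for wellness-sensitive conditions over one measurement period.

    All numbers used with this class below are hypothetical.
    """
    member_months: int                                    # total enrolled exposure
    events: dict[str, int] = field(default_factory=dict)  # category -> admissions


def rate_per_1000(events: int, member_months: int) -> float:
    """Events per 1,000 member-years (12 member-months = 1 member-year)."""
    return events * 12_000 / member_months


def event_rate_table(baseline: PeriodCounts,
                     followup: PeriodCounts) -> dict[str, tuple[float, float, float]]:
    """Return (baseline rate, follow-up rate, change) for each category.

    Only the categories present in the baseline are tallied, so the caller
    decides which events count as wellness-sensitive; everything else is ignored.
    """
    table = {}
    for category, before_count in baseline.events.items():
        before = rate_per_1000(before_count, baseline.member_months)
        after = rate_per_1000(followup.events.get(category, 0), followup.member_months)
        table[category] = (before, after, after - before)
    return table


if __name__ == "__main__":
    # Hypothetical: 10,000 members enrolled all year in both periods.
    base = PeriodCounts(120_000, {"asthma": 30, "IVD": 50})
    follow = PeriodCounts(120_000, {"asthma": 24, "IVD": 45})
    for cat, (before, after, change) in event_rate_table(base, follow).items():
        print(f"{cat}: {before:.1f} -> {after:.1f} per 1,000 member-years ({change:+.1f})")
```

Keeping the category list in the caller's hands is a deliberate choice: it makes the attribution decision explicit instead of letting every claim in the database leak into the tally.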

Wellness has never been shown to have a positive impact on anything other than wellness-sensitive events. Consequently, there is no biostatistical basis for crediting, for example, "a few more bites of a banana" with, to use our favorite example, a claimed reduction in cost for hemophilia, von Willebrand's disease and cat-scratch fever.

By contrast, real researchers such as Iver link outcomes with inputs using a concept called attribution: there has to be a reason, logically attributable to the intervention, that explains the outcome. It can't just be a coincidence, like cat-scratch fever. As a result, he is willing to attribute to wellness only changes in wellness-sensitive medical events.

Event-Rate Plausibility Analysis

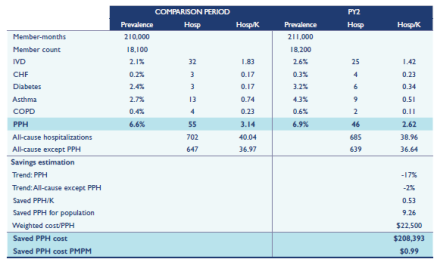

Event rates (referred to below as "PPH," or "potentially preventable hospitalizations") are laid out by disease on page 22 of the HERO Report. Note the finding that PPH are a small fraction of "all-cause hospitalizations." The relatively trivial magnitude of PPH might come as a surprise to people whose vendors have told them that wellness will solve all their problems, but Iver's hospitalization data sample is representative of the US under-65 population as a whole, in which chronic-disease events are rare.

Gross savings total $0.99 per employee per month. This figure counts all events suffered by all members, rather than excluding events suffered by non-participants and dropouts. Hence it marks the first time that anyone in the wellness industry has included those people's results in the total outcomes tally, or even implicitly acknowledged the existence of dropouts and non-participants. He also says, on p. 17:

For example, sometimes savings due to lifestyle risk reduction is calculated on the 20% of the population that supplied appropriate data. It’s assumed that the other 80% didn’t change but if some of the people who didn’t supply risk factor data worsened, and people who got worse were less likely to report their data, that model would overestimate savings.
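
The bias described in that passage can be made concrete with a toy calculation. The sketch below is not the chapter's model: the function name, the head counts and the dollar value per changed person are all hypothetical. It only shows how assuming that non-reporters stayed flat inflates the estimate when the people who got worse are the ones who stopped reporting.

```python
def savings_estimates(improved_reporters: int,
                      worsened_nonreporters: int,
                      value_per_change: int) -> tuple[int, int]:
    """Compare the naive model from the quote with a bias-aware tally.

    naive:    only reporters are counted; non-reporters are assumed unchanged.
    adjusted: also subtracts non-reporters who worsened but never reported.
    value_per_change is a hypothetical dollar value per person whose risk changed.
    """
    naive = improved_reporters * value_per_change
    adjusted = (improved_reporters - worsened_nonreporters) * value_per_change
    return naive, adjusted


if __name__ == "__main__":
    # Hypothetical population of 10,000: 2,000 (20%) report data and 200 of them
    # improve; 160 of the 8,000 non-reporters (2%) quietly worsen. $100 is made up.
    naive, adjusted = savings_estimates(200, 160, 100)
    print(f"naive: ${naive:,}  adjusted: ${adjusted:,}")
```

With these invented numbers the naive model credits $20,000 while the bias-aware tally is $4,000, one-fifth as much, even though only 2% of non-reporters worsened.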

Note that PPH declined only in cardiac ("IVD") and asthma. Not only are the event rates themselves a representative snapshot of the employed US population as a whole, but the observed declines in those rates are almost exactly consistent with national declines over the same period. Those declines can be attributed to improvements in usual care, which occur whether or not a wellness program is in place. The existence and magnitude of the declines, coupled with the slight combined increase in CHF, diabetes and COPD (likewise very consistent with national trends), also confirm that Iver's analysis was done correctly. (Along with attribution, biostatistics looks for independent confirmation from outside the realm of what the investigator can influence.)
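
The attribution logic in the paragraph above amounts to removing the secular trend before crediting a program with anything. A sketch under stated assumptions: the function name is hypothetical, the national trend is applied as a simple percentage change to the baseline rate, and the rates in the usage lines are invented rather than taken from the HERO chapter or from HCUP.

```python
def attributable_change(baseline_rate: float, followup_rate: float,
                        secular_trend_pct: float) -> float:
    """Change in an event rate left over after removing the secular (national) trend.

    expected is what the baseline rate would become if it simply followed the
    national trend; any difference beyond that is the most a program could claim.
    A result near zero means usual-care trends already explain the change.
    """
    expected = baseline_rate * (1 + secular_trend_pct / 100)
    return followup_rate - expected


if __name__ == "__main__":
    # Hypothetical IVD rate per 1,000 member-years falling 10% while the
    # national rate also falls 10%: nothing is left to attribute to the program.
    print(round(attributable_change(5.0, 4.5, -10), 3))
    # Hypothetical case where the rate falls faster than the national trend.
    print(round(attributable_change(5.0, 4.0, -10), 3))
```

Only the second, invented case would leave a residual decline worth investigating, and even then it would still need the plausibility and noise checks discussed elsewhere in this post.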

It is ironic that Ron Goetzel says: “Those numbers are wildly off…every number in that chapter has nothing to do with reality” when I have never, ever seen a case study whose tallies — for either total events or event reduction, let alone both — hewed closer to reality (as measured by HCUP) than this one.

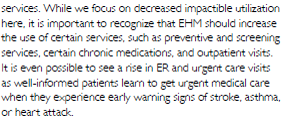

Another factor that conveniently gets overlooked in most wellness analyses is that costs other than PPHs rise. By contrast, Iver is the first person to acknowledge that:

The implication, of course, is that increases in these costs could exceed the usual care-driven reductions in wellness-sensitive medical events. Indeed, Iver’s acknowledgement proved prescient when Connecticut announced that its wellness program made costs go up.

The $0.99 gross savings, and Connecticut’s healthcare spending increase, exclude the cost of the wellness program itself, of course. Factor in Ron Goetzel’s recommendation of spending $150/year for a wellness program and you get some pretty massive losses.
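
The arithmetic behind those losses can be checked in a few lines. This sketch assumes that the $0.99 is per member per month and that the $150 is an annual program cost per member; it uses `Decimal` so the cents stay exact, and the function names are hypothetical. It also ignores the rising non-PPH costs discussed above, so if anything it flatters the program.

```python
from decimal import Decimal


def annual_net_per_member(gross_savings_pepm: Decimal,
                          program_cost_per_year: Decimal) -> Decimal:
    """Annual gross claims savings per member minus annual program cost per member."""
    return gross_savings_pepm * 12 - program_cost_per_year


def savings_to_cost_ratio(gross_savings_pepm: Decimal,
                          program_cost_per_year: Decimal) -> Decimal:
    """Dollars of gross savings returned per dollar of program cost."""
    return gross_savings_pepm * 12 / program_cost_per_year


if __name__ == "__main__":
    gross, cost = Decimal("0.99"), Decimal("150")
    print(annual_net_per_member(gross, cost))   # net dollars per member per year
    print(savings_to_cost_ratio(gross, cost))   # savings per program dollar
```

On these assumptions, $11.88 a year in gross savings set against $150 in program cost leaves a net loss of $138.12 per member per year, or roughly 8 cents back for every program dollar.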

The old Al Lewis would close by making some reference to the dishonesty and cluelessness of the Health Enhancement Research Organization's board. The new Al Lewis will do just the opposite. In addition to congratulating Iver Juster (and his co-author, Ben Hamlin) on putting this chapter together, I would like to congratulate the Health Enhancement Research Organization for what Iver describes as the "team effort" in publishing it: HERO's first flirtation, however fleeting and inadvertent, with integrity and competence.

Iver Juster Comments on the article

Iver reviewed this article and would like to add several points. I am only adding a couple of my own points, noted in indented italics:

- It’s important to credit the work to a larger group than just myself. I was the ‘lead author’ on the financial outcomes chapter of the HERO/PHA measurement guide, but the work entailed substantial planning and review in collaboration with the chapter’s coauthor (Ben Hamlin from NCQA) and members of the group dedicated to the chapter (as well as the HERO/PHA authoring group as a whole).

- Yes, I am more than happy to credit the entire group with this study, especially Ron Goetzel, Seth Serxner and Paul Terry.

- Nonetheless, the work does reflect my perspective and approach on the topic, the important points being: (a) select metrics that are impactable by the intervention or program; (b) be transparent about the metric definitions and the methodology used to measure and compare them; (c) assiduously seek out potential sources of both bias and noise (in other words, exert the discipline of being curious, which is greatly aided by listening to others' points of view); (d) understand and speak to the perspective of the study: payer, employee/dependents, clinician/healthcare system, society.

- Be particularly sensitive to the biologically plausible timeframes in which your outcomes ought to occur, given the nature of the program. Even if a program is optimally implemented with optimal uptake and adherence, we might expect 'leading indicators' like initial behavior changes to improve quickly; program-sensitive biometrics (lipids, A1C, blood pressure, BMI) and medication adherence to change in a matter of months; and a few program-sensitive ER/inpatient visits (like worsening heart failure or asthma/COPD exacerbations) to improve within several months (again, assuming the program is designed to address the causes of these events). Longer-term events like kidney failure, heart attack, stroke and retinopathy take much longer to prevent, partly because preventing them requires sustained healthy behavior and partly because of the underlying biology.

- This is one excellent reason why the measured event-rate decline mirrored the secular decline in the US as a whole over the period, meaning the program itself produced no decline over that period. Those events might decline in future years if Iver is correct. Ron Goetzel would take issue with Iver's assertion: Ron says risk factors decline only 1-2% in 2-3 years.

- Event-rate measurement in any but the largest commercially insured populations is subject to considerable noise. Though it is a challenge, estimating confidence intervals should at least shed light on the statistical noisiness of your findings.

- No need this time, because your results hewed so closely to the secular trend, reflecting the quality of the analysis.

- It is very likely that the program used in the illustration did affect more than the events shown because it was a fairly comprehensive population health improvement initiative. For example, ER visits were not counted; and collateral effects of ‘activation’ – a very key component of wellness – were not included in this analysis. Assuming the 99 cents is an accurate reflection of the program’s effect on the events in the chart, I’d be willing to increase the actual claims impact by 50 to 75%.

- If your speculation is accurate, that would increase gross savings to $1.49 to $1.73 per month, before counting the preventive care increases indicated on page 22.

- Nonetheless, to get an effect from an effective program you have to increase both the breadth (number of at-risk people) and the depth (sustained behavior change, including activation), but at a cost that is less than a 1:1 tradeoff to the benefit. In other words, you must increase value, i.e., outcomes per dollar. This cannot be done through incentives alone. As many researchers have shown, if it can be done at all, it must be the result of a very sustained, authentic (no lip service!) company culture.

- We are beginning to pay attention to other potential benefits of well-designed, authentic employee / workplace wellness programs (of which EHM is a part) on absenteeism, presenteeism, employee turnover and retention – and, importantly, company performance (which is after all what the company is in business to do). It’s early days but it’s possible research will show that companies that are great places to work and great places to have in our society will find financial returns that far outstrip claims savings. The jury’s still out on this important topic but let’s help them deliberate transparently and with genuine curiosity.

- Did Ron really say you have to spend $150 per year PER MEMBER on a wellness program? I'd be thinking a few dollars (unless he's including participation incentives).

- (1) Yes, he did say that; (2) no, he’s not including participation incentives; and (3) welcome to my world.
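
Two of the figures in this exchange lend themselves to a quick check. The sketch below uses hypothetical helper names. The first function reproduces the $1.49 to $1.73 range by applying a 50 to 75% uplift to $0.99 and rounding to cents. The second addresses the noise point with a rough normal-approximation interval around a Poisson event rate; that approximation is a simplification chosen here for brevity (exact Poisson intervals are better for small counts), and the 24 admissions over 120,000 member-months are invented for illustration.

```python
import math
from decimal import Decimal, ROUND_HALF_UP


def uplift_range(gross_pepm: Decimal, low_pct: int, high_pct: int) -> tuple[Decimal, Decimal]:
    """Scale a gross per-member-per-month figure up by low_pct..high_pct percent, rounded to cents."""
    cents = Decimal("0.01")
    low = (gross_pepm * (100 + low_pct) / 100).quantize(cents, rounding=ROUND_HALF_UP)
    high = (gross_pepm * (100 + high_pct) / 100).quantize(cents, rounding=ROUND_HALF_UP)
    return low, high


def poisson_rate_ci(events: int, member_months: int,
                    z: float = 1.96) -> tuple[float, float, float]:
    """Rate per 1,000 member-years with a rough normal-approximation interval.

    Treats the event count as Poisson (variance equal to the count), so the
    half-width on the count scale is z * sqrt(events). For small counts an
    exact Poisson interval is preferable; this is only a quick noise check.
    """
    half_width = z * math.sqrt(events)
    rate = events * 12_000 / member_months
    lower = max(0.0, (events - half_width) * 12_000 / member_months)
    upper = (events + half_width) * 12_000 / member_months
    return rate, lower, upper


if __name__ == "__main__":
    print(uplift_range(Decimal("0.99"), 50, 75))
    # Hypothetical: 24 admissions among 10,000 members over one year.
    rate, lower, upper = poisson_rate_ci(24, 120_000)
    print(f"{rate:.2f} per 1,000 member-years (95% CI roughly {lower:.2f} to {upper:.2f})")
```

For the invented 24 admissions the interval runs from about 1.44 to 3.36 per 1,000 member-years, which shows how easily a year-over-year change in a population of that size can fall inside the noise.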

He’s being very nice to them considering how much they dissed his study.

He is a very gracious guy, and I don't think he anticipated their reaction once they realized that the study, precisely because it was done correctly, showed that wellness loses money. They should have noticed this during their many reviews, before it went to print, so they could have put the kibosh on the whole thing and substituted some of their own propaganda. Too late now. 🙂

Perfect because you go high but still manage to diss the clowns who run HERO by pointing out they were too stupid to notice how this chapter undermined their whole fiction.
